Where is “The Edge” and why does it matter?

Key takeaways:

- The Edge is an optimization problem - It is not a place and not a single technology.

- There are several factors to consider when deciding whether and what kind of distributed computing technology to use in pursuit of solving a business problem.

- The ability to support a spectrum of edge and cloud deployment options is a valuable capability in considering which vendors to work with.

The Edge is not a place – It is an optimization problem. Edge computing is about doing the right things in the right places. As with all optimization problems, getting to the “right” answer requires considering a number of tradeoffs that are specific to your situation and then applying the right technology to maximize the benefits for the cost you are willing to pay.

Terms and technology

Part of what makes Edge confusing is that definitions of “The Edge” tend to focus on technologies rather than on use cases. Since use cases span a very wide range of requirements and the boundaries between those use cases don’t map directly to technologies, definitions in terms of technology can be difficult to use. That said, here is a framework that I find helpful.

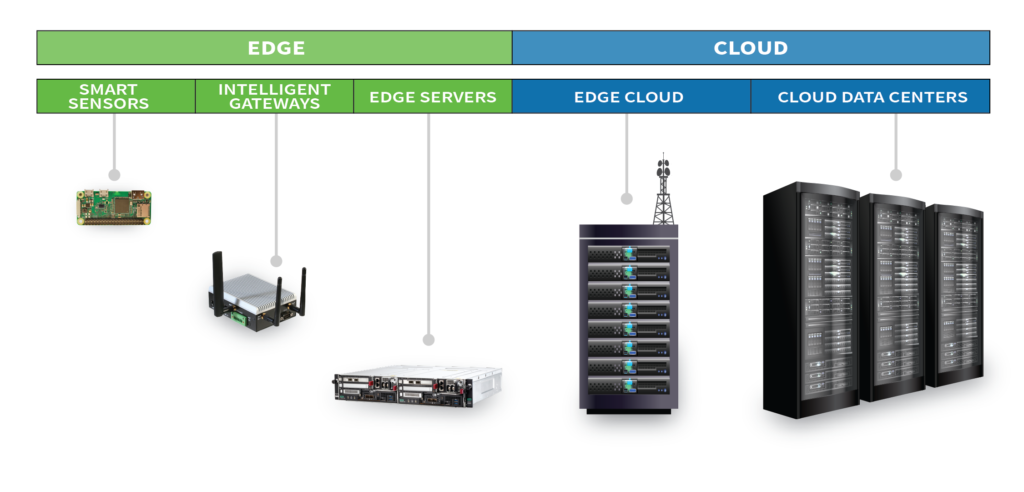

The basic idea behind edge computing is that the closer the compute hardware is to the equipment generating data, the closer to “The Edge” it is. Broadly speaking, computing domains can be divided into edge and cloud – that is, local to your network and outside of your network. Within each domain, options can be further subdivided:

- Cloud – outside of your local area network

- Cloud data centers – large facilities which host computing, storage and networking resources in a central location. In general, this puts a large distance between data generators and compute resources.

- Edge cloud – smaller versions of data centers which are collocated with wireless transmission towers. This puts a minimum distance between cellular WAN (Wide Area Network) or LPWAN (Low Power WAN) receivers and compute resources. This also includes distributed cloud data centers which are strategically located to minimize distance from customer access points.

- Edge – inside your local area network

- Edge servers – smaller footprint but general high-performance servers that are on premise and may or may not be close to actual equipment. There are a range placement options (from local data center to plant floor) necessitating a range of ruggedization options.

- Intelligent gateways – lower performance, very small footprint, general purpose computers that are usually placed close to the equipment that they connect to. These are frequently on the plant floor or in the field and need to be ruggedized.

- Smart sensors – special purpose, low-power, often microcontroller-based compute dedicated to data collection or processing for a specific sensor or device.

Plants will generally have a mix of compute capabilities that serve different purposes so expect to see some or all of these deployment options in any facility that you work with. The important point is that there is a range of capabilities and that those can be grouped, roughly, into categories. There is nothing inherently superior about any of the options. Rather, the choice of which one(s) to use depends on what you want to achieve.

Edge vs Cloud – Optimizing for constraints

Edge computing is important because it allows you to achieve certain performance that cloud computing cannot. Likewise, cloud computing is good at certain things which edge computing is not. Therefore it is important to consider the problem you’re trying to solve in order to select the right approach. The table below outlines some key areas to consider and under what conditions edge or cloud might be superior.

Long story short, Edge is a good choice for applications which need results VERY quickly (low 10s of milliseconds), that generate significant amounts of data in locations that have intermittent, low-bandwidth or high-cost connections and that don’t require extremely complex calculations. Cloud-based infrastructure is better for almost everything else if not in performance, then in procurement cost and operational complexity.

Where is your Edge?

An example can help make some of these factors more concrete and highlight how different aspects of equipment operations might be better served by different combinations of Edge and Cloud technologies.

A mining operator has a fleet of mobile drilling rigs which are deployed to various locations in a mine. They are concerned about three things: 1) Emissions compliance for the rig’s exhaust, 2) Signs of very near term equipment failure during operation and 3) Condition Based Maintenance (CBM) planning. The nature of the deployment means that the drill rigs are frequently outside of WiFi range of the rig storage facility. Cellular coverage is non-existent at this location. Constructing and deploying private cell towers is cost-prohibitive and wireless LPWAN technologies lack the bandwidth for these applications. The table below shows how one might consider which computing technologies best address these three needs.

Making your Edge work for you

Because The Edge is an optimization problem whose solution depends on the goals of the organization and the specifics of the questions being asked of the data, flexible deployment options are important when considering analytic solutions for your company. Falkonry understands this and has emphasized development in our Time Series AI platform to accommodate a range of capabilities supporting cloud and edge. The core of our software is designed to take advantage of cloud computing technologies and is readily deployed into a fully cloud environment. This supports both the high computational and storage loads required for model learning as well as the lower loads of inference in a well-connected environment. We also have Edge Analyzers. These are lightweight, containerized instances of the Falkonry inference engine that can run on smaller footprint compute hardware, such as gateways or IPCs, and that can function entirely disconnected from the cloud. Finally, we have an air gap version of the entire suite which packages the full capabilities of the software into a self-contained “cloud in a box.” In this configuration, functions can be deployed entirely behind firewalls or in environments where internet connectivity is not available. Contact us to learn more about how we can help you find your Edge.

Note: A version of this article first appeared on Toolbox Tech