How deep learning model management with GPUs makes industrial automation AI real

Key takeaways:

- Conventional approaches to bespoke machine learning don’t scale well to account for the variety and complexity of use cases, requiring bespoke model creation.

- Monolithic deep learning models also do not scale for industrial troubleshooting, which requires a very large number of machine-learned models.

- Creating and operating a very large number of small models is a superior approach that can efficiently exploit older generation GPUs.

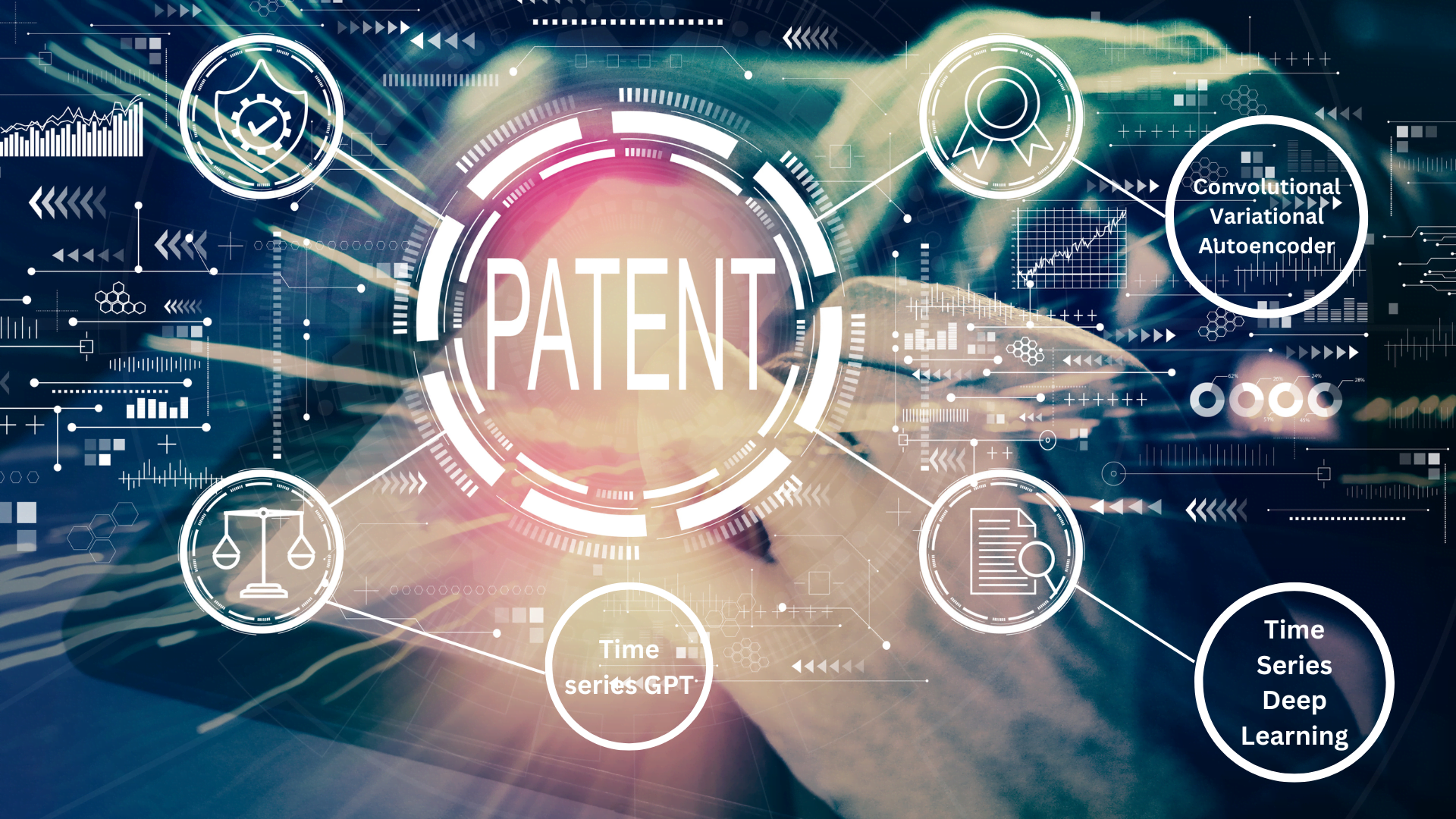

- Falkonry has patented this approach to offer factory-scale, automated anomaly detection for use directly by plant personnel.

Ever since researchers found that the matrix processing capabilities of GPUs could be used for workloads outside the realm of computer graphics, it has been difficult to imagine deep learning applications without some form of hardware acceleration. GPUs first made certain workloads possible by reducing their time and power requirements. This led to the image and language models of today with training datasets so large they were unthinkable just a few years ago. These models enable a whole slew of use cases ranging from on-device speech recognition to generative image models on the server side like DALL-E and Stable Diffusion. While this demonstrates the capabilities of deep learning models, it does so by throwing tons of data and compute at the problem. Unfortunately, it’s not an approach that works for industrial applications, as they tend to have a different dimension of complexity – variety – while still needing to be inexpensive for end users.

General models require a general domain, and while this holds true for language (as humans, we might disagree on semantics, but we still have a good shared understanding of how a particular language works) it is very much problematic for say, an internal combustion engine. Different engine models, combined with different use cases produce a matrix of interpretations which, in turn, require a prohibitive amount of data in order to adequately train models. Needless to say, when applied to a complex industrial facility, this is a requirement that an average AI project cannot fulfill. This holds true, especially in cases where the equipment might be exchanged on an annual basis or reconfigured on a daily basis. We believe the only way to address this challenge is to train a bespoke model for each piece of equipment but do so programmatically and in time to be useful. This is where we see a need and an opportunity for innovation in model and infrastructure design.

Model Design

An average factory floor in a modern production facility can have upwards of 5,000 sensors. If it takes 1 hour of GPU time to train 10 signals, it would require 500 GPU hours, nearly 3 weeks, just to train an initial version. That is obviously not a very scalable proposition, especially considering that training needs to be repeated when equipment gets replaced or reconfigured. To successfully train a model for each signal on a regular basis we have to ensure that the models are as small as possible. We were able to reduce our models to ~100k parameters each. This is a big breakthrough in the efficiency of deep learning technology compared to the baseline of 100s of millions of parameters for today’s average-to-large speech and language models.

This comes at a cost to the model’s ability to capture everything about the modeled signals, as the lower number of learned parameters can only encode so much information. However, it does allow us to detect and encode most of the important information contained in the sensor signals. In the realm of anomaly detection, this makes for some interesting use cases. For instance, if we measure the residual entropy that the system wasn’t able to encode, we can detect changes in the underlying physical system. To do this, we apply autoencoders which give us a measure of the system’s unpredictability (the entropy measure during the reconstruction of signal patterns). Then, we learn the range in which the level of entropy for individual components resides and watch for changes that indicate anomalies.

There are a number of clever ways to ensure this works well for varying use cases (which we will describe in-depth in another blog post), but for now, let’s address the challenge of deploying and training all of those models.

Infrastructure Design

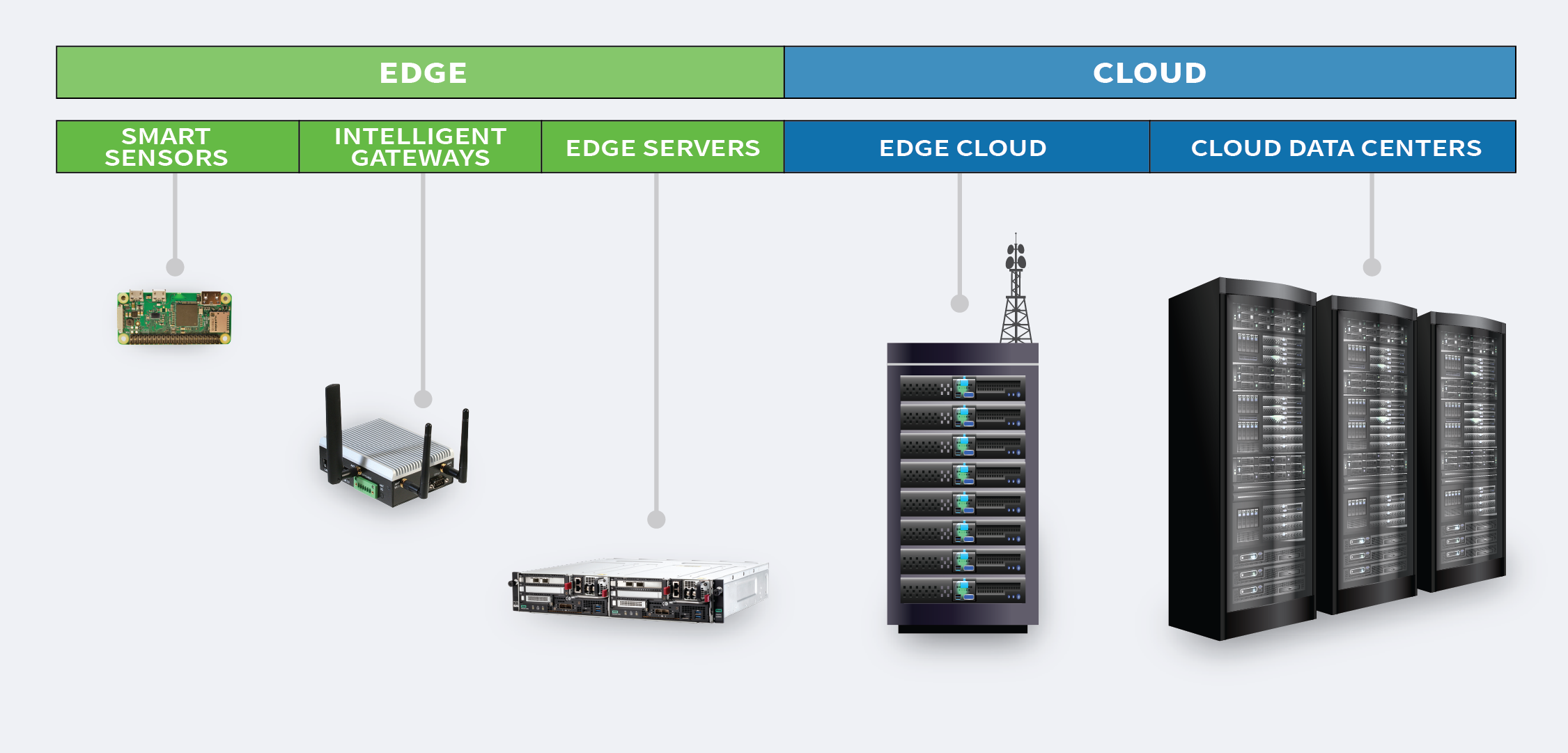

The production of AI models through training can be viewed as a kind of factory production. We have to standardize the production process in order to optimally use the available resources. Therefore, we apply a time-box approach to training where each iteration has a fixed time window in which to train. The fact that our models are small works to our advantage, as the number of samples we need to train the model is also quite small, around 2000-3000 data points (that’s less than an hour’s worth of data on a 1Hz sampling rate). Plateau detection is a further measure to drive down the training time, culminating in a speedy 3-4 minutes worth of training per signal (a single time series) on a GPU (typically NVIDIA’s T4s and A10s). On a CPU this would take significantly longer, in the range of 20 minutes while taking up 8 cores of a 3.2 GHz Xeon CPU. When we consider that it is possible to run over 10 simultaneous training sessions on a T4, we start to realize just how much efficiency a GPU-based infrastructure brings to the table. Training 5,000 signals for 5 minutes at a time requires 50 GPU hours vs. 208 CPU hours (we are assuming 64 core Xeons). If we distribute the load we end up with a turnaround time of 10 hours per model generation using 5 T4 GPUs or less than 4 hours when using 5 A10 GPUs (A10 has more memory and almost 4x the Cuda cores which allows for running more processes simultaneously). The resulting models are transferred to the inference servers which evaluate incoming sensor data looking for anomalies either in the cloud or on the edge and can be performed up to 100x more efficiently on GPUs than on CPUs

Utilizing this unique model training paradigm, we are further expanding the capabilities of Edge AI like never before. According to Gartner, Edge AI refers to the use of AI techniques embedded inside physical operations such as IoT. The adoption of Edge AI is the greatest in manufacturing use cases. Ultra-efficient GPUs at the edge are unlocking use cases which would have seemed impossible just a few years ago. Applying a machine learning model on thousands of sensor signals, which would be the scale of an industrial plant or a modern naval vessel, would have required considerable amounts of processing machinery consuming a lot of power, space, and cooling. GPU inference speeds are easily 3-5x above the most advanced CPUs for models like ours, and for larger models, this advantage only increases. Nowadays, a small box like an NVIDIA Jetson or Xavier can process 100s, if not thousands of signals in a form factor no bigger than a pint while consuming less than 50W of power. This enables an automated anomaly detection system right on the factory floor, and would reduce the amount of cloud processing required to run advanced root cause analyses or simply to archive the processed signals.

Application

The progress we’ve been experiencing in the realm of GPU technology has gotten us to the point where we no longer need to invest in acquiring and maintaining a vast computing infrastructure in order to benefit from AI in manufacturing. It is now possible to use off-the-shelf GPUs for training and inference and almost immediately start spotting anomalies and predicting conditions in a manufacturing process. The incremental learning and the unsupervised nature of learning anomaly detection eliminates the need for upfront labeling and modeling. This anomaly detection can start acting as a kind of “Spidey-Sense” for a factory, detecting changes in the physical configuration of the factory by monitoring discrepancies between the observed behavior and the expected behavior of the digital twin.

While DALL-E has over 10 billion parameters and GPT-3 well above 100 billion, we can model the behavior of a vibration sensor on a motor with less than a hundred thousand parameters. The difference in scale is similar to that of a mammal brain and the brain of a fruit fly. While an anomaly detection model like ours won’t be able to write an essay or paint a picture, it might be able to act as a sort of digital amygdala that can detect and signal danger or stress in different parts of the machinery. That is exactly the information a worker on the factory floor needs.