Data best practices for successful AI deployment

Key Takeaways

- AI does not need a large quantity of data or specialized big data infrastructure; rather, it needs well-defined, high-value use cases and smaller sets of good-quality data.

- It is important to ensure that adequate sensor data is available, to gather data with discipline, and to define a clear purpose for the collected data.

- Data integrity issues are unavoidable, so streaming data requires smart signal processing.

Applying time series AI to industrial operations can improve equipment availability, production quality, and overall plant performance. However, even best-in-class manufacturers will find room to improve outcomes further. The principle of GIGO (garbage in, garbage out) holds that the output is only as good as the input: if the input signals or the resulting data are suboptimal, the analytics layer can return suboptimal inferences. For example, a false positive can mean slowing production to perform unnecessary maintenance, while a false negative can lead to unplanned downtime. Streamlined data collection and utilization practices help avoid such situations and let you extract this last-mile benefit from AI deployment. Here are some best practices around data that we've put together after years of working with our customers.

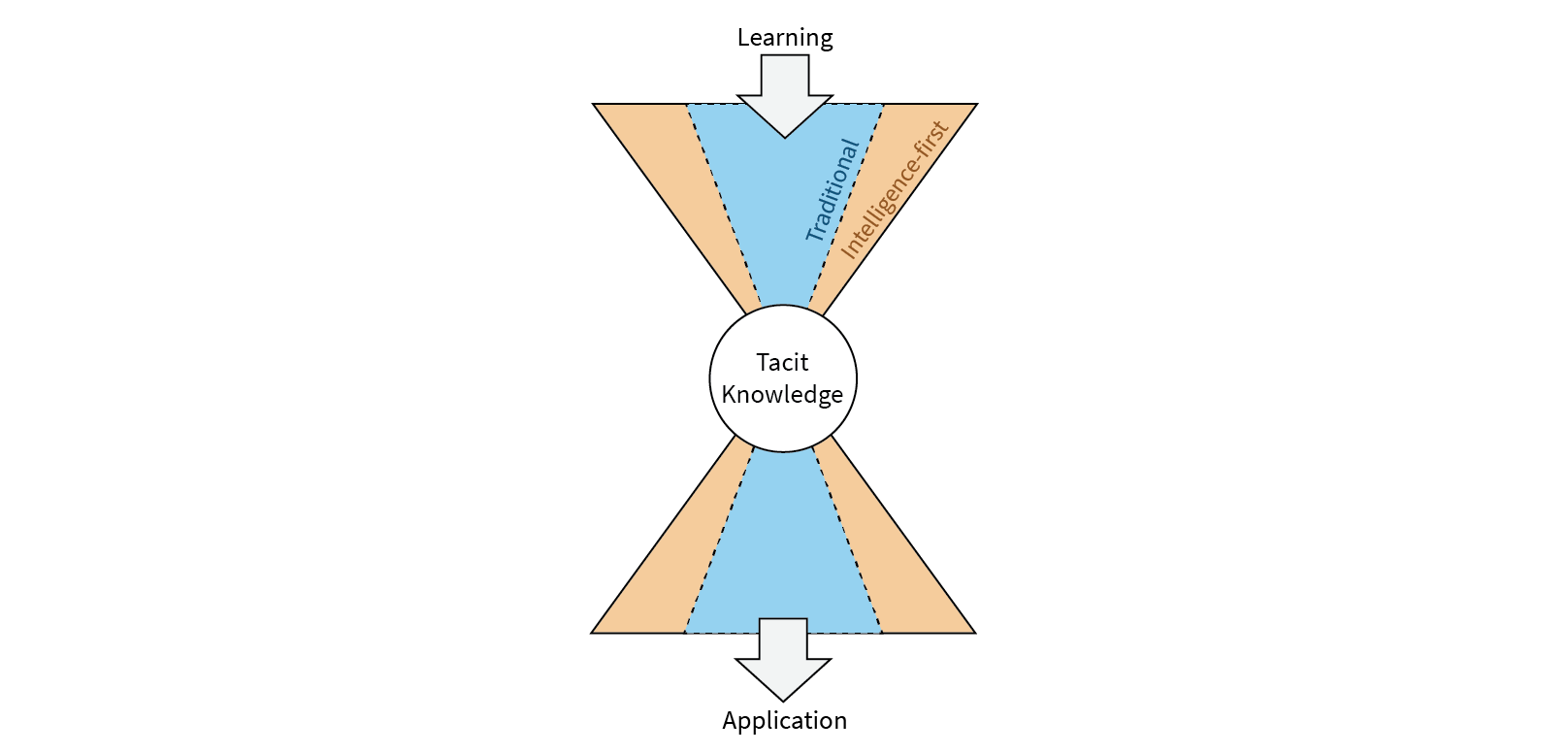

Have a clear purpose: Spending on data infrastructure such as data lakes before identifying what problem needs to be solved and what data is really needed usually ends up being wasteful, and it often yields little value from the collected data. By the time the organization aligns on how the data is to be used, the data may be malformed or unusable because it is no longer relevant, or it may lack the context of the plant's operating conditions, which were not captured and therefore cannot be correlated with the data in the lake. A better approach is to discover relevant patterns and surface insights from today's operational data by adopting intelligence-first practices and working in the now. As AI pioneer and educator Andrew Ng puts it, “start with a mission”.

Data gathering discipline is important: It often comes as a surprise that sensors are not operating properly, or that details about the reasons for downtime are missing or not granular enough. The asset model in many enterprise asset management (EAM) systems is shallow; as a result, findings made during repairs are not assigned to the root-cause components. This makes it difficult to establish the “ground truth” needed to train AI models.
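To make the ground-truth problem concrete, here is a minimal sketch, assuming maintenance events can be exported from the EAM system with an asset hierarchy path, a time window, and a finding. The `MaintenanceEvent` record, `shallow_events`, and `label_at` are hypothetical names for illustration, not part of any EAM product's API. The first function flags events recorded too high in the hierarchy to identify a root-cause component; the second turns events into a label for any timestamp, which is the form a training pipeline typically needs.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class MaintenanceEvent:
    """One downtime or repair record (hypothetical EAM export format)."""
    asset_path: tuple[str, ...]  # e.g. ("Line 2", "Pump P-101", "Impeller")
    start: datetime
    end: datetime
    finding: str


def shallow_events(events: list[MaintenanceEvent], min_depth: int) -> list[MaintenanceEvent]:
    """Return events whose asset path is too shallow to name a root-cause component."""
    return [e for e in events if len(e.asset_path) < min_depth]


def label_at(ts: datetime, events: list[MaintenanceEvent]) -> str:
    """Return the ground-truth label for a timestamp, or 'normal' outside every event window."""
    for e in events:
        if e.start <= ts < e.end:
            return "/".join(e.asset_path) + ": " + e.finding
    return "normal"
```

Events returned by `shallow_events` are the ones worth revisiting with the repair crew before they are used as training labels.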

Use standardized tags: It is not uncommon for plants to change tag names over time. While name changes pose no real problem for post-event forensics, they can cause significant data integrity issues for off-the-shelf real-time AI algorithms. Other data wrangling activities, such as column and row rationalization, are not significant challenges for desktop analytics but require smart signal processing for self-supervised AI. [The Falkonry Time Series AI Platform can automatically alert operational technology teams to unexpected tags or tag name changes.]
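As an illustration of what detecting tag changes involves, the sketch below compares the tags arriving in a data batch against an expected tag list and, as a simple heuristic, suggests likely renames by string similarity using Python's standard `difflib.get_close_matches`. This is not how the Falkonry platform does it; `check_tags`, the `cutoff` value, and the example tag names are assumptions for illustration only.

```python
import difflib


def check_tags(expected: set, received: set, cutoff: float = 0.6) -> dict:
    """Compare incoming tags with the expected list (hypothetical helper).

    Rename suggestions are a heuristic: a missing tag is paired with the most
    similar unexpected tag if their similarity ratio is at least `cutoff`.
    """
    missing = expected - received       # tags that stopped arriving
    unexpected = received - expected    # tags nobody configured
    suspected_renames = {}
    for old in sorted(missing):
        match = difflib.get_close_matches(old, sorted(unexpected), n=1, cutoff=cutoff)
        if match:
            suspected_renames[old] = match[0]
    return {"missing": missing, "unexpected": unexpected, "suspected_renames": suspected_renames}


result = check_tags(
    expected={"P101_VIB_X", "P101_AMPS", "P101_SUCT_PRESS"},
    received={"P101_VIB_X", "P101_AMPS", "P101_SUCTION_PRESS", "P102_FLOW"},
)
```

Here `P101_SUCT_PRESS` is reported as missing and paired with `P101_SUCTION_PRESS`, while `P102_FLOW` remains simply unexpected. A human should still confirm any suggested rename before the data is remapped.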

Ensure availability of adequate sensor data: With “packaged equipment,” often only alarms and status alerts are available, while the physical analog signals are missing or locked within the equipment controls. Make sure that your equipment vendor can share the signal data you need, and confirm that the sensor signals needed to characterize the problem being tackled are actually available.
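Even when signals are available, it pays to check that they are actually usable. The following is a minimal sketch of two common signal integrity checks, assuming a signal arrives as a list of timestamps (in seconds) and a list of values: gaps where samples stop arriving, and flatlines where a sensor is stuck on one value. The function names, the `factor` of 2, and the `min_run` threshold are hypothetical choices, not a standard.

```python
def find_gaps(timestamps, expected_period_s, factor=2.0):
    """Return (t0, t1) pairs where consecutive samples are more than factor * period apart."""
    return [
        (t0, t1)
        for t0, t1 in zip(timestamps, timestamps[1:])
        if t1 - t0 > factor * expected_period_s
    ]


def find_flatlines(values, min_run, tol=0.0):
    """Return (first_index, last_index) of runs that stay within tol of the run's
    first value for at least min_run samples (a possible stuck sensor)."""
    runs = []
    start = 0
    for i in range(1, len(values) + 1):
        # A run ends at the end of the data or when the value departs from the run's anchor.
        if i == len(values) or abs(values[i] - values[start]) > tol:
            if i - start >= min_run:
                runs.append((start, i - 1))
            start = i
    return runs
```

For example, `find_gaps([0, 10, 20, 60, 70], expected_period_s=10)` reports the gap `(20, 60)`. A real deployment would tune the thresholds per signal, since some process values legitimately hold steady for long periods.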

Commit the required resources for data upkeep: This does not mean that a staff data scientist is needed; in fact, a boots-on-the-ground reliability engineer or maintenance manager can ensure that data quality is maintained, that events of interest are recorded properly and promptly, and that the conditions detected by AI are properly recorded and acted upon (if needed). These activities become less cumbersome with easy-to-use AI apps; however, resources must still be dedicated to providing continuous feedback to the AI, particularly during model training and revisions. Think of AI as a smart but new employee who needs to be coached and cared for. Good data housekeeping lets the AI reach its full potential in delivering results.
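One lightweight way to make that feedback useful is to record a verdict for every AI alert and for every event the AI missed, then track how the model is doing over time. The sketch below assumes a hypothetical set of verdict strings (`confirmed`, `false_positive`, `missed`, `unreviewed`) entered by an engineer; it computes precision (how many alerts were real) and recall (how many real events were caught), which map directly to the false positives and false negatives discussed earlier.

```python
from collections import Counter


def feedback_summary(verdicts):
    """Summarize engineer feedback on AI output (hypothetical verdict labels).

    'confirmed'      - AI alert matched a real condition (true positive)
    'false_positive' - AI alert with no real condition behind it
    'missed'         - real event recorded by staff that the AI did not flag
    'unreviewed'     - alert nobody has looked at yet
    """
    counts = Counter(verdicts)
    tp, fp, fn = counts["confirmed"], counts["false_positive"], counts["missed"]
    precision = tp / (tp + fp) if tp + fp else None
    recall = tp / (tp + fn) if tp + fn else None
    return {"precision": precision, "recall": recall, "unreviewed": counts["unreviewed"]}
```

A growing `unreviewed` count is itself a signal that the feedback loop needs more attention.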

Get the right kind of data: Both electromechanical condition signals and process data must be considered. For example, in pump impeller failure detection, one may look at vibration or amperage signals to tell whether the equipment is approaching a critical failure condition. However, adding the pressure and temperature of the upstream process may give an early indication of cavitation, which is what erodes the impeller.
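Why do pressure and temperature help here? Cavitation occurs when the local liquid pressure drops to the liquid's vapor pressure, and vapor pressure rises sharply with temperature. The sketch below is a simplified indicator, assuming the pumped fluid is water and that suction pressure is measured as an absolute value in kPa. It estimates vapor pressure with the Antoine equation (commonly cited water constants, valid roughly from 1 to 100 °C) and flags a low margin. It ignores velocity head and losses into the impeller eye, so it is not a full NPSH calculation, and the `min_margin_kpa` threshold is a hypothetical, site-specific choice.

```python
MMHG_TO_KPA = 0.133322  # 1 mmHg expressed in kPa


def water_vapor_pressure_kpa(temp_c):
    """Antoine equation for water: log10(P[mmHg]) = A - B / (C + T[°C])."""
    if not 1.0 <= temp_c <= 100.0:
        raise ValueError("these Antoine constants are only valid for about 1-100 °C")
    log10_p_mmhg = 8.07131 - 1730.63 / (233.426 + temp_c)
    return 10 ** log10_p_mmhg * MMHG_TO_KPA


def cavitation_margin_kpa(suction_abs_kpa, temp_c):
    """Suction pressure above the vapor pressure (simplified; no velocity head)."""
    return suction_abs_kpa - water_vapor_pressure_kpa(temp_c)


def cavitation_risk(suction_abs_kpa, temp_c, min_margin_kpa):
    """True when the margin falls below a (hypothetical) site-specific threshold."""
    return cavitation_margin_kpa(suction_abs_kpa, temp_c) < min_margin_kpa
```

At 100 kPa absolute suction pressure, warming the water from 25 °C to 95 °C shrinks the margin from roughly 97 kPa to roughly 15 kPa, even if vibration and amperage have not yet changed. That trend is the early warning the process signals can provide.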

Let the data surprise you: If you follow all of the data best practices above, a capable AI engine should be able to unearth all sorts of valuable information from the data, which can be used to make operational changes whose benefits accrue over time. It is important not to develop tunnel vision by looking only for failure modes. Ask questions such as: Is there something else that an AI-identified anomaly can mean? Is a difference between sensor values related to a loss of calibration? Is there a product quality implication we are missing because we are focused on predictive maintenance?
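The calibration question, for instance, can be checked directly when two sensors measure the same quantity. The following is a minimal sketch, assuming two time-aligned, equal-length series from redundant sensors. It flags sample indices where the rolling median of their difference exceeds a tolerance; the median makes a single spike less likely to trigger a flag, while a sustained offset, typical of drift, does. `calibration_drift`, `window`, and `tolerance` are hypothetical names and parameters.

```python
from statistics import median


def calibration_drift(a, b, window, tolerance):
    """Return indices where the rolling median of (a - b) over the last `window`
    samples exceeds `tolerance` in magnitude (possible loss of calibration)."""
    if len(a) != len(b):
        raise ValueError("sensor series must be time-aligned and of equal length")
    diff = [x - y for x, y in zip(a, b)]
    flagged = []
    for end in range(window, len(diff) + 1):
        if abs(median(diff[end - window:end])) > tolerance:
            flagged.append(end - 1)  # index of the newest sample in the window
    return flagged
```

A flag here does not say which sensor is wrong, only that the pair disagrees persistently; that is a prompt for a calibration check rather than a maintenance work order.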

Essentially, there is a tendency to miss the forest for the trees. To avoid that, let the data talk to you and tell you the story. In the plant, domain experts make assessments based on their experience or training, but in a data-driven world, that approach is antithetical. A shift toward a data-driven mindset is needed. After all, if it were purely a matter of expertise, plants already have enough experts.

A capable AI facilitates the analysis of unusual conditions and improves continuous monitoring. With the right kind of data and easy-to-use AI, maintenance teams gain visibility into equipment variables they previously lacked, resulting in improvements beyond failure mode detection.