Data surprises in production applications of Operational AI

Key Takeaways

- Operational data is far messier in reality than it is commonly perceived to be.

- The benefits of operational AI depend heavily on selecting trusted data sources and maintaining communication integrity.

- In addition to discoveries made by operational AI, digital leaders should monitor data quality and communication issues.

“Garbage in, garbage out” means that the output is only as good as the input. The concept is not new, but it matters more than ever now that Machine Learning (ML) systems are expected to make trustworthy predictions and reliably detect conditions, and mistakes cost money. For example, in a Predictive Maintenance application, a false prediction (false positive) can mean slowing production to perform maintenance that is not needed. A missed prediction (false negative) has the opposite effect: a failure goes unanticipated and an important value creation opportunity is missed.

Industrial environments are complicated, messy places in terms of the data they generate, and they do not produce the high-quality data streams available to other, more traditional machine learning applications. Cause and effect can be far apart, mediated by multiple pieces of equipment, and there are frequently significant gaps in data collection between input and output. Further, even where measurements exist, they are rarely perfect. “Garbage in” can be introduced in many ways:

- Sensors

- Connections

- Noisy process environments

- Misunderstood protocols

- Assumed equipment similarities

Sensors

With the advent of IoT, sensors have proliferated. They are meant to be simple to install and to offer a low Total Cost of Ownership (TCO). Even with these improvements, however, there are still several common issues to watch out for.

Sensors need recalibration from time to time. Newer sensors have “smarts” that alert maintenance personnel when calibration is needed, or that adjust themselves automatically to minimize drift. However, these alerts and self-calibrations are far from perfect and should be validated regularly. In one case, an electrical submersible pump in an oil well reported a current of more than 50,000 amperes. Such a current, even if physically possible, would have set the motor power cables on fire; had it been real, the protective breakers would have tripped first. In another case, one phase of a three-phase motor read 15 amps higher than the other two, a condition that would have caused obvious, serious operational issues with the motor.
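A minimal sketch of the kind of physical-sense check that would have flagged both cases above. The limits `MAX_PLAUSIBLE_CURRENT_A` and `MAX_PHASE_IMBALANCE_A` and the function `check_motor_currents` are hypothetical; real limits would come from the motor nameplate, cable rating and protection settings. Each phase is compared with the median of the three rather than the mean, so one bad phase cannot drag the reference toward itself.

```python
import statistics
from typing import Sequence

# Hypothetical limits for illustration; real values come from the motor
# nameplate, cable rating and protection settings.
MAX_PLAUSIBLE_CURRENT_A = 500.0
MAX_PHASE_IMBALANCE_A = 5.0


def check_motor_currents(phases: Sequence[float]) -> list[str]:
    """Return warnings for three-phase current readings that make no physical sense."""
    warnings = []
    for i, amps in enumerate(phases, start=1):
        # Out-of-range readings are more likely sensor or scaling faults than real currents.
        if not 0.0 <= amps <= MAX_PLAUSIBLE_CURRENT_A:
            warnings.append(f"phase {i}: {amps:.1f} A is outside the plausible range")
    # The median of the phases is a reference that a single bad phase cannot skew.
    reference = statistics.median(phases)
    for i, amps in enumerate(phases, start=1):
        if abs(amps - reference) > MAX_PHASE_IMBALANCE_A:
            warnings.append(
                f"phase {i}: {amps:.1f} A deviates {amps - reference:+.1f} A from the phase median"
            )
    return warnings


print(check_motor_currents([52.0, 51.0, 66.0]))
# ['phase 3: 66.0 A deviates +14.0 A from the phase median']
```

Such a check does not say what is wrong, only that the reading deserves a human look before any model trusts it.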

Also, beware of built-in “Normalization” and “Outlier Detection” algorithms. Outliers matter because there is no general way to tell which readings are real and which are not, and the cause of an outlier may need correcting to prevent damage to the equipment or process. “Normalizing” the readings can also be risky. For example, in the case where one phase carried a higher current than the others, normalizing the readings could have hidden a problem with equipment grounding.
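To see how per-signal normalization can hide the phase imbalance described above, the sketch below z-scores each phase independently, which is assumed here as a typical preprocessing default. The readings are made up: phase C simply carries a constant 15 A offset over phase A.

```python
import statistics


def zscore(values: list[float]) -> list[float]:
    """Standardize a series to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    return [(v - mean) / stdev for v in values]


# Hypothetical readings in amps: phase C runs a constant 15 A above phase A.
phase_a = [50.0, 52.0, 51.0, 53.0, 50.0]
phase_c = [v + 15.0 for v in phase_a]

# In raw units the offset is obvious...
raw_gap = statistics.fmean(phase_c) - statistics.fmean(phase_a)
# ...but after normalizing each phase independently it disappears.
norm_gap = statistics.fmean(zscore(phase_c)) - statistics.fmean(zscore(phase_a))
print(f"raw gap: {raw_gap:.1f} A, normalized gap: {norm_gap:.3g}")
```

After normalization the two phases become the same series, so a model fed only the normalized values cannot see the imbalance. Keeping the raw values alongside, or normalizing all phases with one shared mean and scale, preserves the difference.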

It is as important as ever to check sensor output from time to time to ensure it makes physical sense.

Connections

Just like your mobile phone, home Wi-Fi or internet connection, communication between devices and systems is susceptible to interruptions. Many IoT devices rely heavily on wireless connectivity because it reduces installation costs and deployment times. However, wireless technologies, whether RF bands, Wi-Fi or cellular, are not yet as reliable or as fast as many applications require.

The lack of reliability becomes a problem when trying to make decisions in real-time. What is the intelligent system supposed to do when data stops flowing from a sensor?

- Use the last value

- Estimate the sensor value

- Assume a “safe” value

- Assume the crash landing position (just kidding)

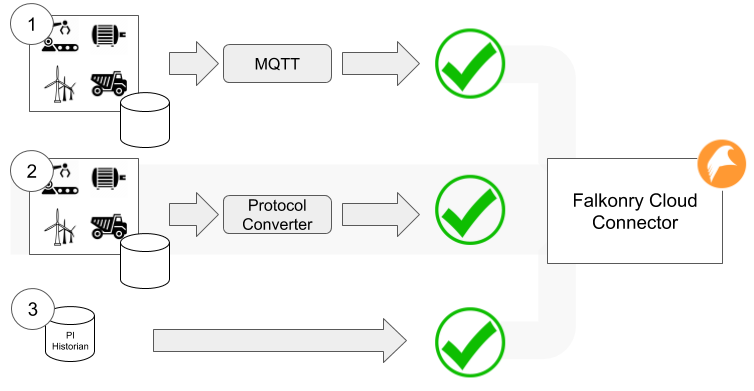
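The first three options in the list above can be written down as an explicit, agreed policy rather than left to an accident of implementation. This is a minimal sketch; `GapPolicy`, `fill_gap` and the naive two-point extrapolation used for `ESTIMATE` are illustrative choices, not a recommended estimator.

```python
from enum import Enum
from typing import Optional


class GapPolicy(Enum):
    LAST_VALUE = "last_value"  # hold the last good reading
    ESTIMATE = "estimate"      # extrapolate from recent readings
    SAFE_VALUE = "safe_value"  # substitute a value agreed to be safe


def fill_gap(history: list[float], policy: GapPolicy, safe_value: float) -> Optional[float]:
    """Return a substitute reading while a sensor is silent, or None if none can be produced."""
    if policy is GapPolicy.SAFE_VALUE:
        return safe_value
    if not history:
        return None  # nothing to hold or extrapolate from
    if policy is GapPolicy.LAST_VALUE or len(history) < 2:
        return history[-1]  # ESTIMATE falls back to the last value when only one reading exists
    # ESTIMATE: naive linear extrapolation from the last two readings.
    return history[-1] + (history[-1] - history[-2])


readings = [4.0, 4.5, 5.0]
# Prints LAST_VALUE 5.0, ESTIMATE 5.5, SAFE_VALUE 0.0
for policy in GapPolicy:
    print(policy.name, fill_gap(readings, policy, safe_value=0.0))
```

Whichever policy is chosen, substituted values should be marked as substituted so that downstream models and operators never mistake them for measurements.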

The first step in dealing with connectivity loss is to recognize that communication has been lost. In systems based on “messaging” protocols such as MQTT, it can be difficult to detect that a sensor has stopped working. For example, if the system publishes updates only when a sensor value changes, an absence of readings may indicate a network failure, or it may simply mean that the value has not changed and so, correctly, no new message was sent.
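The ambiguity of publish-on-change reporting can be shown with a small simulation, assuming a publisher that sends a value only when it moves by more than a deadband. `report_by_exception` is a hypothetical helper, not part of any MQTT client library, and `None` stands for a sample lost to a dropped link.

```python
from typing import Optional


def report_by_exception(readings: list[Optional[float]], deadband: float) -> list[tuple[int, float]]:
    """Simulate a publisher that sends only when the value moves by more than `deadband`.

    A None reading models a dropped link: nothing reaches the receiver.
    Returns the (sample_index, value) messages the receiver actually sees.
    """
    sent: list[tuple[int, float]] = []
    last_sent: Optional[float] = None
    for i, value in enumerate(readings):
        if value is None:
            continue  # link down: no message is delivered
        if last_sent is None or abs(value - last_sent) > deadband:
            sent.append((i, value))
            last_sent = value
    return sent


steady_signal = [20.0, 20.0, 20.1, 20.0, 20.05, 19.98]
link_dropped = [20.0, None, None, None, None, None]

# Both cases deliver exactly one message: [(0, 20.0)]
print(report_by_exception(steady_signal, deadband=0.5))
print(report_by_exception(link_dropped, deadband=0.5))
```

From the receiver's side the two streams are identical, which is why silence alone cannot be interpreted.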

Equipment health monitoring must be designed to recognize gaps and take appropriate action. For example, the receiving system should know “how long is too long” between sensor values, based on an understanding of each sensor’s configuration. This would not only address loss of communication but could also detect failure of the connected device and notify the maintenance team. Taking the time to define and manage device and sensor communications helps ensure that garbage is not introduced and that real problems are addressed promptly.
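A minimal “how long is too long” watchdog might look like the sketch below. It assumes each sensor, or its gateway, is configured to send a forced update or heartbeat at least every `max_interval_s` seconds even when the value does not change; `SensorConfig`, `stale_sensors` and the intervals are hypothetical. MQTT's own Keep Alive and Will Message features can reveal that a client connection has dropped, but not that a sensor behind a still-connected gateway has gone quiet.

```python
from dataclasses import dataclass


@dataclass
class SensorConfig:
    name: str
    # Hypothetical: the longest silence that is still normal for this sensor,
    # e.g. its forced-update or heartbeat period plus a margin.
    max_interval_s: float


def stale_sensors(configs: list[SensorConfig], last_seen: dict[str, float], now: float) -> list[str]:
    """Return names of sensors silent for longer than their configured maximum interval."""
    stale = []
    for cfg in configs:
        seen = last_seen.get(cfg.name)
        # A sensor that has never reported is treated as stale.
        if seen is None or now - seen > cfg.max_interval_s:
            stale.append(cfg.name)
    return stale


configs = [
    SensorConfig("flow", 60.0),
    SensorConfig("temperature", 900.0),
    SensorConfig("pressure", 30.0),
]
last_seen = {"flow": 1000.0, "temperature": 500.0}  # epoch seconds of last message
print(stale_sensors(configs, last_seen, now=1100.0))  # ['flow', 'pressure']
```

Because the interval is stored per sensor, a slow temperature transmitter and a fast flow meter can share one watchdog, and a stale result can be routed to the maintenance team as a device-health event.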

Noisy Process Environments

Sometimes process conditions mean that poor sensor placement or sensor choice makes readings less reliable or more variable. While this is often unavoidable, its impact should be addressed. Well-instrumented systems take the process environment into account at design time, and they are easier to use with AI than systems that require after-market sensors.

For example:

- If the sensor is placed in a location that is noisy (air bubbles, turbulence, etc.), does the analytical model account for the increased variance in the data?

- Can a filter be applied to the measurement to eliminate noise-related frequencies?

- Are the sensor and cabling properly grounded for the environment?
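Where filtering is appropriate, a first-order low-pass filter (an exponential moving average) is one of the simplest options. The sketch below assumes evenly sampled data; `ema_filter`, the smoothing factor `alpha` and the readings are illustrative, and the right amount of smoothing depends on the process. It is meant for slowly varying process measurements, not vibration signals.

```python
import statistics


def ema_filter(samples: list[float], alpha: float) -> list[float]:
    """First-order low-pass filter (exponential moving average).

    alpha must be in (0, 1]; smaller values smooth more but react more slowly.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    if not samples:
        return []
    out: list[float] = []
    y = samples[0]  # start at the first sample to avoid a start-up transient
    for x in samples:
        y = alpha * x + (1.0 - alpha) * y
        out.append(y)
    return out


noisy = [10.0, 12.0] * 50  # hypothetical reading jittering around 11.0
smoothed = ema_filter(noisy, alpha=0.2)
# Compare spread after the filter has settled; the smoothed spread is far smaller.
print(statistics.pstdev(noisy[20:]), statistics.pstdev(smoothed[20:]))
```

The spread that remains after filtering is also a rough figure for the residual noise an analytical model should expect from that sensor location.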

Vibration sensors, in particular, are becoming popular in Predictive Maintenance applications. In general, vibration signals should not be filtered, because the high frequencies that characterize vibration would be removed as if they were noise. Sensor placement must also be carefully considered. Placing sensors on the motor is less expensive and therefore a favorite solution of some IoT sensor vendors, but it reduces the chance of detecting early vibrations that occur in the equipment itself.
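Why filtering hurts vibration analysis can be shown numerically. This sketch assumes a hypothetical 1 kHz sample rate and made-up 10 Hz and 400 Hz components, applies a 5-point moving average (a typical noise filter) to each, and measures the peak that survives.

```python
import math

FS = 1000.0       # hypothetical sample rate, Hz
N_SAMPLES = 1000  # one second of data


def tone(freq_hz: float) -> list[float]:
    """Unit-amplitude sine wave sampled at FS."""
    return [math.sin(2 * math.pi * freq_hz * n / FS) for n in range(N_SAMPLES)]


def moving_average(x: list[float], n: int) -> list[float]:
    """n-point moving average, a common 'noise' filter (only full windows are kept)."""
    return [sum(x[i:i + n]) / n for i in range(len(x) - n + 1)]


def peak(x: list[float]) -> float:
    return max(abs(v) for v in x)


process_peak = peak(moving_average(tone(10.0), 5))     # slow process component
vibration_peak = peak(moving_average(tone(400.0), 5))  # vibration component
print(f"10 Hz peak after filter: {process_peak:.3f}")     # 0.996
print(f"400 Hz peak after filter: {vibration_peak:.3f}")  # 0.000
```

The 10 Hz component passes almost unchanged, while the 400 Hz component, standing in for a vibration signature, is cancelled almost completely. The filter discards exactly the information a Predictive Maintenance model needs.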

Misunderstood protocols

Even with well-defined communication protocols, there is still room for misinterpreting the data contained in the messages. There are two common areas where this problem occurs:

- Interpreting data quality

- Interpreting special values

Some very common industry-standard protocols, such as OPC, report three values: Value, Timestamp and Quality (VTQ). It is important to understand the meaning of the Quality flag because it reveals the health of various components and can be very useful in diagnosing issues. From a Machine Learning perspective, it is especially important to take advantage of this data quality information. Bad and Uncertain qualities indicate unreliable data. The response to low-quality data must be part of the system design and agreed ahead of time. For example, if the predictive system indicates an impending failure but the data behind that prediction is of poor quality, should the prediction be acted on right away?
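One way to make the “agreed ahead of time” response concrete is a small gate between the model and the people who act on it. In this sketch the three-level `Quality` enum is a simplification (OPC DA quality bits and OPC UA status codes encode quality differently), and `Vtq`, `prediction_action`, the 90% threshold and the action strings are hypothetical placeholders for a policy agreed with operations.

```python
from dataclasses import dataclass
from enum import Enum


class Quality(Enum):
    # Simplified three-level quality for illustration; real protocols encode it
    # differently (e.g. OPC DA quality bits vs. OPC UA status codes).
    GOOD = "good"
    UNCERTAIN = "uncertain"
    BAD = "bad"


@dataclass
class Vtq:
    value: float
    timestamp: float  # seconds since the epoch
    quality: Quality


def prediction_action(inputs: list[Vtq], min_good_fraction: float = 0.9) -> str:
    """Decide how to treat a failure prediction given the quality of its inputs.

    The threshold and the returned actions are hypothetical placeholders for
    a policy agreed with operations ahead of time.
    """
    if not inputs or any(v.quality is Quality.BAD for v in inputs):
        return "hold: verify the data before acting"
    good = sum(v.quality is Quality.GOOD for v in inputs)
    if good / len(inputs) >= min_good_fraction:
        return "act"
    return "review: prediction relies on uncertain data"
```

The point of the design is that the model's output is never passed on without the quality of the data it was computed from.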

In one project, a customer sent zeroes to indicate loss of communication. This practice is not necessarily wrong, but if the Quality flag is ignored, the system confuses communication-loss events with genuine zero readings, with undesired results.
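A minimal sketch of the fix, assuming each reading carries a quality indicator (reduced here to a hypothetical `good_quality` boolean): readings without Good quality become NaN, which pandas and many numerical tools treat as missing, instead of a zero the model would take at face value. `Reading` and `to_model_input` are illustrative names.

```python
import math
from dataclasses import dataclass


@dataclass
class Reading:
    value: float
    good_quality: bool  # hypothetical: True when the source reports Good quality


def to_model_input(readings: list[Reading]) -> list[float]:
    """Map readings to model input, turning anything without Good quality into NaN.

    A 0.0 sent with Bad quality means "no data", not a real zero, so it must
    not reach the model as a measurement.
    """
    return [r.value if r.good_quality else math.nan for r in readings]


print(to_model_input([Reading(0.0, True), Reading(0.0, False), Reading(12.5, True)]))
# [0.0, nan, 12.5]
```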

Assumed Equipment Similarities

One of the potential benefits of Predictive Maintenance is the ability to reuse algorithms across many assets that are alike. However, “alike” is not well defined. For example, does “alike” mean that all assets have the:

- Same signals – same measured variables?

- Same sensor ranges – same operational range, calibration and scale?

- Same capacity – same throughput, batch size or operating speed?

- Same make and model number?

- Same service – same process application?

These distinctions can be illustrated with a simple balloon example. Suppose we are tasked with building an algorithm to predict when a balloon will burst:

- Same signals: the same measurements are taken for each balloon, e.g., flow rate, pressure and temperature.

- Same ranges: same flow rate, pressure and temperature ranges and scales for each balloon’s filling apparatus.

- Same capacity: All balloons are the same size.

- Same make and model number: All the balloons are made of the same material from the same manufacturer.

- Same service: All balloons are filled with the same gas (are we using air or nitrous oxide?) at the same elevation.
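One way to make “alike” explicit is to record each asset's profile along the five dimensions above and compare profiles before reusing a model. `AssetProfile`, its fields and the balloon values are hypothetical.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class AssetProfile:
    # Hypothetical fields mirroring the five "alike" dimensions.
    signals: frozenset[str]                              # measured variables
    sensor_ranges: tuple[tuple[str, float, float], ...]  # (signal, low, high) in engineering units
    capacity_l: float                                    # e.g. balloon volume in litres
    make_model: str                                      # manufacturer and material
    service: str                                         # e.g. fill gas and elevation


def differences(a: AssetProfile, b: AssetProfile) -> list[str]:
    """Return the names of the dimensions on which two assets differ."""
    return [f.name for f in fields(AssetProfile) if getattr(a, f.name) != getattr(b, f.name)]


balloon_a = AssetProfile(
    signals=frozenset({"flow_rate", "pressure", "temperature"}),
    sensor_ranges=(("pressure", 0.0, 5.0),),
    capacity_l=10.0,
    make_model="latex, vendor A",
    service="air, sea level",
)
# Same balloon, different fill gas.
balloon_b = replace(balloon_a, service="nitrous oxide, sea level")
print(differences(balloon_a, balloon_b))  # ['service']
```

A non-empty result does not forbid reuse; it lists the differences a domain expert should judge before a model trained on one asset is trusted on the other.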

The point is that we cannot properly address these kinds of problems with simple maths and data science alone. We need to understand the differences in equipment and conditions that can lead to misleading expectations of reuse. Take the earlier example of the motor in which one phase reported a higher current. Is this difference an anomaly, or should the algorithm assume that all motors are expected to behave that way at a certain point in time?
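Whether a high-current phase is an anomaly depends on the baseline it is judged against. This sketch compares each motor's latest phase-imbalance reading (in amps) with its own history and with its peers' history; the 3-standard-deviation rule, `imbalance_flags` and the readings are all hypothetical, and at least two motors are required.

```python
import statistics


def _is_outlier(value: float, baseline: list[float], k: float) -> bool:
    """True if value lies more than k population standard deviations from the baseline mean."""
    mean = statistics.fmean(baseline)
    sd = statistics.pstdev(baseline)
    return abs(value - mean) > k * sd


def imbalance_flags(
    history: dict[str, list[float]], latest: dict[str, float], k: float = 3.0
) -> dict[str, tuple[bool, bool]]:
    """Map each motor to (anomalous vs. own history, anomalous vs. peers' history)."""
    flags = {}
    for motor, value in latest.items():
        own = history[motor]
        peers = [x for other, values in history.items() if other != motor for x in values]
        flags[motor] = (_is_outlier(value, own, k), _is_outlier(value, peers, k))
    return flags


history = {
    "m1": [1.0, 1.2, 0.9, 1.1, 1.0],
    "m2": [1.1, 0.9, 1.0, 1.2, 1.0],
    "m3": [15.0, 15.2, 14.9, 15.1, 15.0],  # has always run with a high imbalance
}
print(imbalance_flags(history, {"m1": 3.0, "m3": 15.0}))
# {'m1': (True, False), 'm3': (False, True)}
```

Motor m1 has drifted from its own history yet still looks unremarkable among its peers, while m3 looks abnormal against the fleet but is behaving exactly as it always has. Neither baseline answers the question alone; someone who understands the equipment has to decide which one applies.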

Conclusion and Recommendations

“Truth and oil always come to the surface.”

Spanish proverb

Everyone dealing with data is equally responsible for ensuring its trustworthiness: the operations teams for primary data and the predictive operations teams for predictions. Production systems in particular must be self-aware and detect problems with their own reliability if they are to be trusted. The reliability of primary data sources is often overlooked because the data is so voluminous, while systems that make predictions have a much better handle on detecting issues with signals. Such systems are therefore well placed to detect primary data quality issues. At the same time, operations experts should pay more attention to the quality of their primary data sources to reduce delays in getting production benefits from predictive systems.

The following are recommendations for each of the challenge areas. Note: anomaly detection, visualization and other capabilities of AI software packages can help spot and understand some of these issues so that they can be dealt with more quickly.

- Sensors

  - Regularly monitor the health of sensors.

  - Identify and monitor calibration issues.

  - Ensure maintenance teams understand sensor issues and address them.

- Connections

  - Regularly monitor device connections.

  - Work with vendors and integrators to understand how communication failures are detected and to agree on a strategy for dealing with gaps in the data. For example, make sure the reporting frequency of each value is understood: what is “too long” to wait for a new value, and how should a gap in the data be handled? Note that the acceptable interval may differ from sensor to sensor.

- Noisy process environments

  - Identify sensor functions and their locations in the process to minimize the impact of measurement noise.

  - Make sure the maintenance teams understand any issues with process conditions and sensor locations so that they can address them.

- Protocol misunderstandings

  - Work with vendors and integrators to ensure that measurement standards are clear and aligned.

- Assumed equipment similarities

  - Understand process conditions and operating modes to identify what is the same and what is different enough to have an impact.