Building a Solid Analytics Foundation from Higher Technical and Lower Cultural Readiness

Key takeaways:

- Starting from a high-low stance requires harnessing the organization’s technical capabilities to empower non-data experts to serve themselves.

- Enabling operational teams to use data frees the data science team to solve the hardest problems while allowing analytics to scale.

If you’re new to this series, please read the introduction to building a solid analytics foundation before continuing.

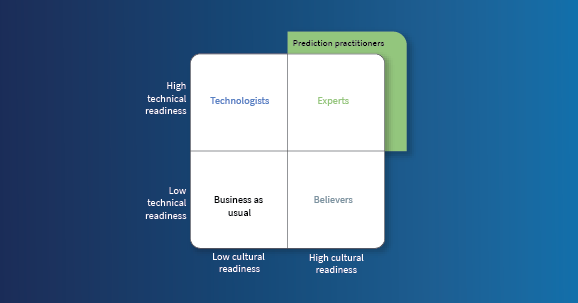

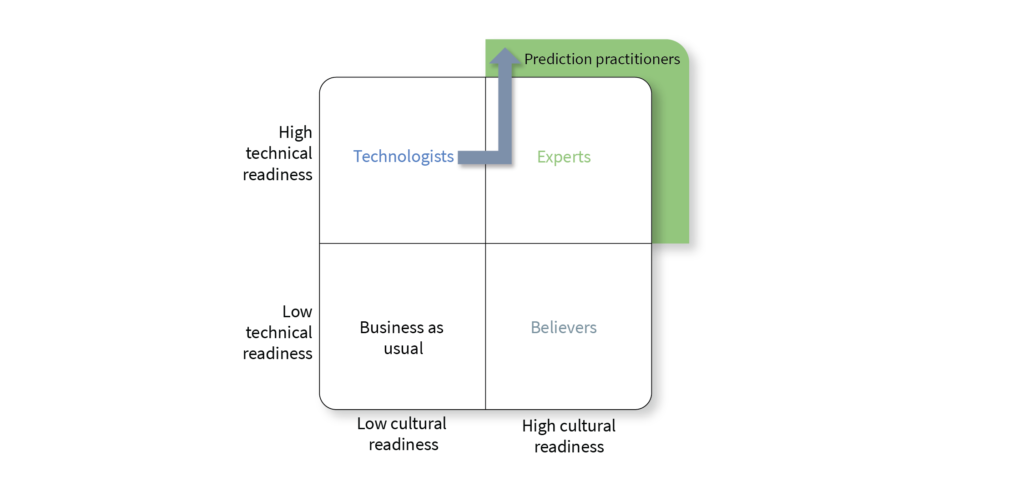

The characteristics of a high technical readiness and low cultural readiness (high-low) organization are summarized below. Remember that this isn’t a judgement, a grade or a level. This is just a convenient label for describing where an organization might start in its quest to implement predictive analytics.

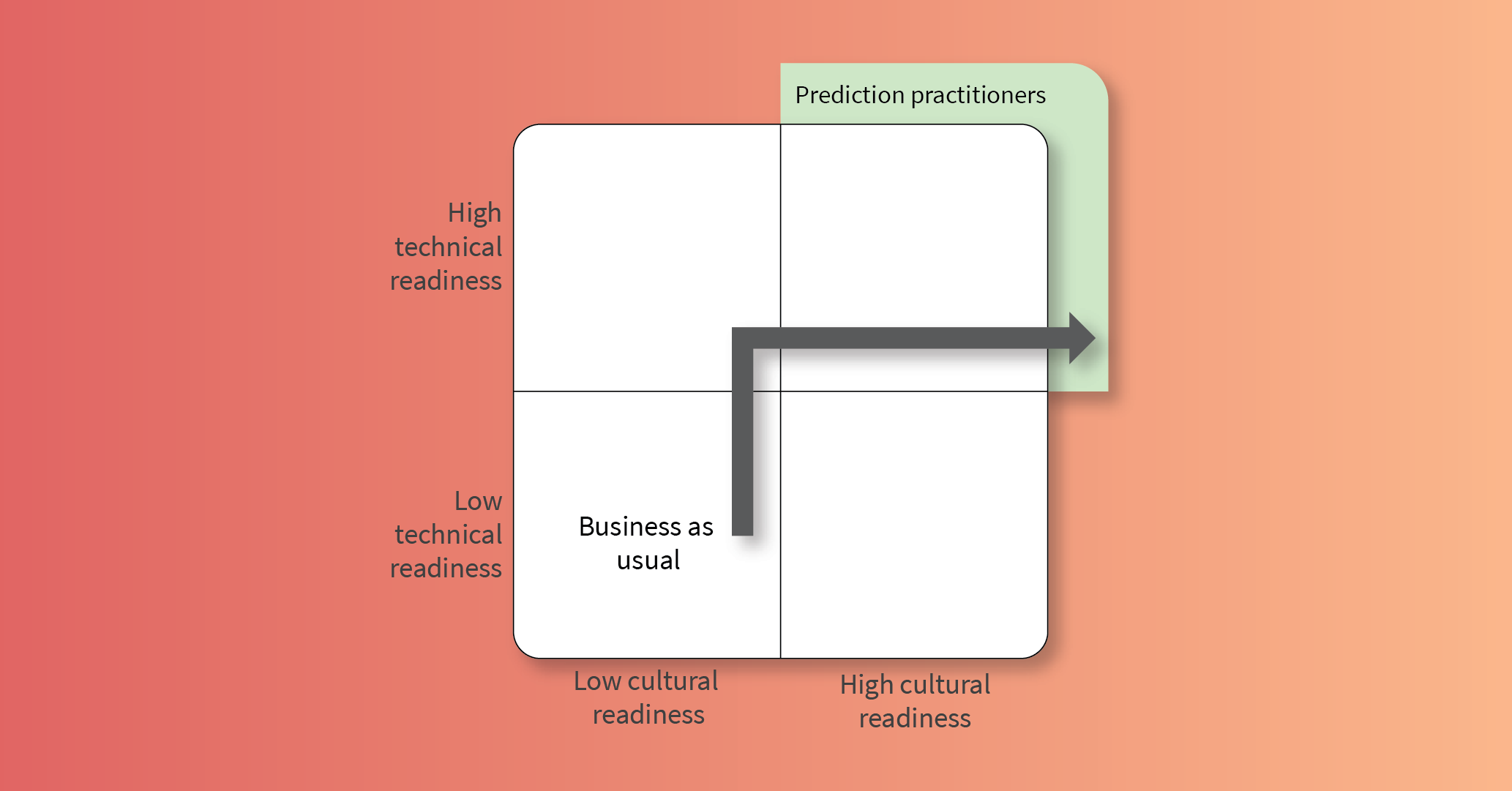

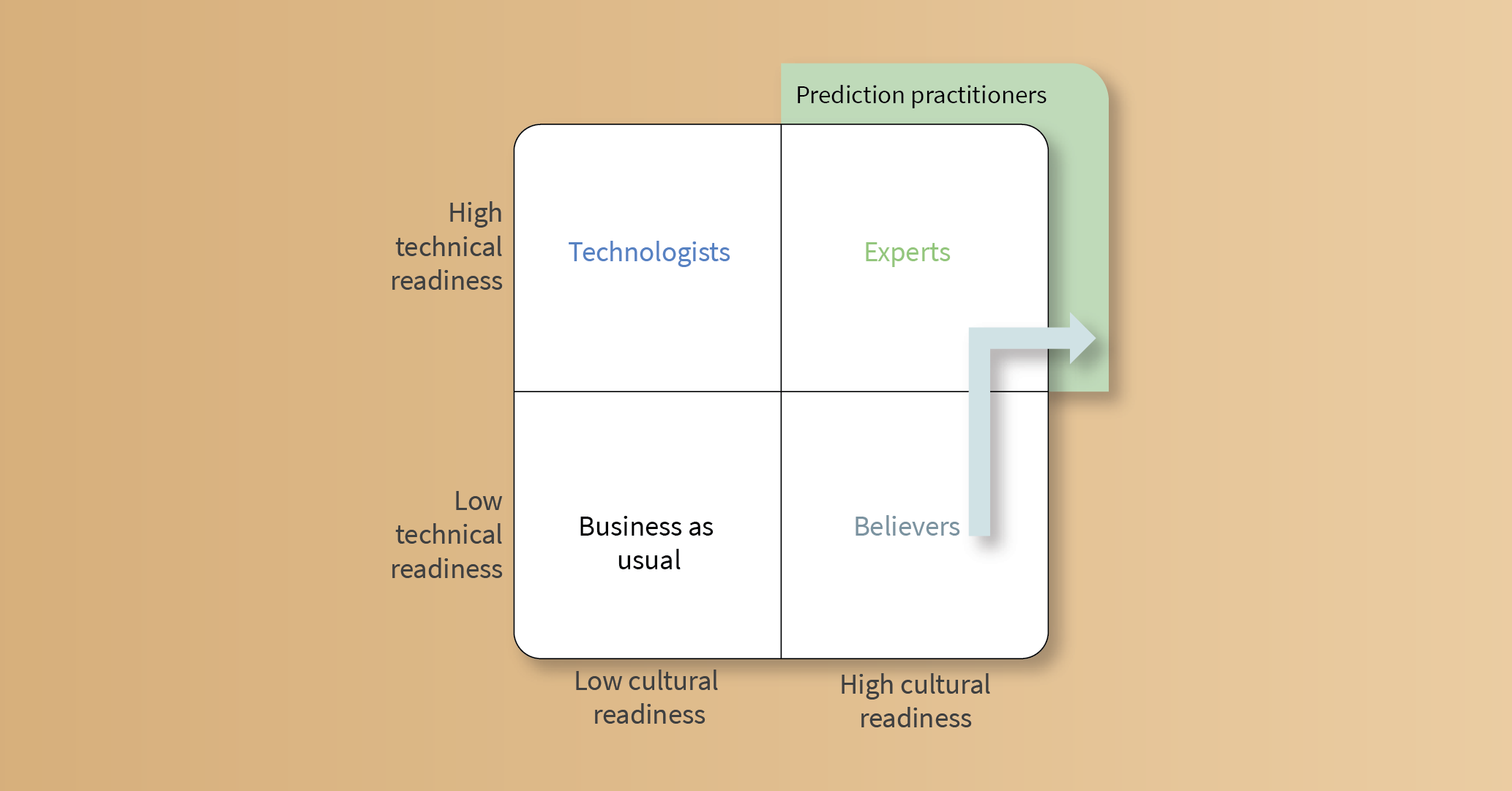

Our experience suggests that an effective path to predictive operations from this state is to leverage the organization’s technical capabilities in a focused campaign, enabling a broad base of operational users to provide daily, data-based insights. This is shown schematically in figure 1 below.

A high technical capability organization will have tools, techniques and know-how for solving complicated problems. The issue is that much of what needs to be done on a day-to-day basis doesn’t require the full power of those capabilities. While much of the hype around Industry 4.0 is about applying AI to ever-growing streams of sensor data, the truth is that there is significant value in implementing more mundane capabilities. For example, some organizations generate vast quantities of data that their operations teams have never seen. Others see the data regularly but don’t ask the right questions of it, missing useful insights.

This disconnect between the operations teams’ needs and the data science team’s priorities is an organizational impedance mismatch. In a low cultural readiness organization, operations teams want to rely on data science to do everything for them (“data isn’t my job”), but data science tends to prioritize the most difficult or interesting problems (“why should I do something so simple?”). That mismatch can stymie attempts to create a culture that scales analytics to the level where it impacts overall operational outcomes. Getting past this hurdle requires two things: the right technology for the operations teams and the right system for using it.

For operations teams, the right tool for the job means analytics software that is simple to use and maintain on their own. The availability of the data science team is valuable for the special problems that will inevitably come up, but the data science team cannot be the first responders to everything that happens in the plant. That is, data science is not the right tool for this job. The data science team should help identify a set of tools that:

- Describe what equipment or processes are doing using visualization and pattern detection – especially detection of anomalous patterns of activity.

- Include a basic capability to summarize findings (e.g. compute averages and standard deviations) and alert on simple rules, like thresholds.

- Make it easy to quickly generate reports that can be used in meetings, for example via dashboards and adequate filtering or search tools that quickly narrow the data to the subset needed for a report.
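As a rough illustration of how little machinery the second item needs, the sketch below computes summary statistics and applies a fixed threshold rule using only the Python standard library. The function names, the temperature values and the 85.0 limit are hypothetical, chosen for illustration; they do not come from any particular product.

```python
from statistics import mean, stdev


def summarize(readings):
    """Return basic summary statistics for a list of numeric sensor readings."""
    return {
        "count": len(readings),
        "mean": mean(readings),
        # Sample standard deviation needs at least two readings.
        "stdev": stdev(readings) if len(readings) > 1 else 0.0,
        "min": min(readings),
        "max": max(readings),
    }


def threshold_alerts(readings, upper_limit):
    """Return (index, value) pairs for readings above a fixed upper limit."""
    return [(i, v) for i, v in enumerate(readings) if v > upper_limit]


# Hypothetical bearing temperatures in degrees Celsius (made-up values).
temperatures = [71.0, 72.5, 70.8, 73.1, 88.4, 72.0]

summary = summarize(temperatures)
alerts = threshold_alerts(temperatures, upper_limit=85.0)

print(summary)
print(alerts)
```

A tool for operations teams would wrap this kind of logic behind a simple interface; the point is only that the underlying rules are simple enough for non-specialists to understand and maintain.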

Given the kinds of tasks that data scientists perform, it is entirely possible that the existing arsenal of tools will not contain something that meets these needs while also being simple enough to use. You don’t need a bazooka to hunt rabbits. Resist the temptation to “buy the best,” which would impose the same degree of configurability and customizability that data science experts have spent years learning to use effectively. The goal is widespread adoption, not perfection.

With an appropriate tool set identified, the next step is to put the tools into daily use among the operational teams so that their value can be experienced directly. One way to do this is to start using the tools to feed discussion during the daily meeting of an interested, motivated operational team. Set the expectation that the simplified analytics tools be used to see how sensor data and patterns in that data correlate with important OEE drivers, as well as to discover patterns of behavior that the team may not have seen before. Don’t start by trying to solve specific problems. Instead, take time to allow the availability of data to shape the way daily meeting conversations happen. You’ll know you’re succeeding when questions like “Did we see an anomalous pattern at the same time maintenance reported that equipment problem?” or “I saw that pattern again yesterday, should we reroute the critical production request to avoid this machine today while we check out the system calibrations?” start to become common.

This isn’t about replacing the data science team. This is about allowing the data experts to focus on solving really hard problems while still scaling analytic adoption as a whole. For that to happen, the operations teams need to be empowered to do the “simpler” analytic steps on their own. Only when they identify a problem that their knowledge or tools can’t handle should the bazookas be brought out by the people most qualified to use them. Operations teams can get there with the right expectations, encouragement and gentle guidance from the data scientists.
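A question like “Did we see an anomalous pattern at the same time maintenance reported that equipment problem?” comes down to a simple time-window lookup. The following is a minimal sketch, assuming anomaly detections and maintenance reports are both available as timestamps; the function name, the two-hour window and all dates are hypothetical.

```python
from datetime import datetime, timedelta


def anomalies_near_reports(anomaly_times, report_times, window=timedelta(hours=2)):
    """Map each maintenance report to the anomalies seen within `window` of it."""
    matches = {}
    for report in report_times:
        matches[report] = [a for a in anomaly_times if abs(a - report) <= window]
    return matches


# Hypothetical timestamps (made-up values).
anomalies = [
    datetime(2024, 1, 8, 6, 15),
    datetime(2024, 1, 8, 13, 40),
    datetime(2024, 1, 9, 9, 5),
]
reports = [datetime(2024, 1, 8, 14, 30)]

matches = anomalies_near_reports(anomalies, reports)
for report, nearby in matches.items():
    print(report, "->", nearby)
```

The window size is a judgment call for the operations team; a wider window catches slower-developing problems at the cost of more coincidental matches.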

Initial tests should begin to show bright spots of success. Management and executives should then promote those wins within the company, spreading the message about the importance of analytics and data-driven decision making to the culture of the company. This will encourage other teams to engage, gradually spreading the practice and increasing both the technical and, more importantly, social proof that this works here. As more teams come on board, the data science team will need to transition from being hands-on facilitators to being consultants for the process as well as resources for escalation. The net result is twofold: the organization gains a belief, backed by experience, that operations teams can and should do this sort of analytics, and that there is a capable, reliable team of experts backing up those teams when needed. No longer is data science the bottleneck: they are the enablers.

Falkonry’s time series AI was designed to be a part of the operations teams’ tool kit. Its ability to detect novel patterns and to classify previously identified patterns of interest is a cornerstone of characterizing equipment and processes. Falkonry’s explanation scoring capability lets engineers rapidly understand the source of interesting behaviors, and the focus on ease of use makes widespread adoption feasible, clearing a path to realizing predictive analytics at scale.